The site https://yaustal.com/ has gathered a collection of the most varied humor for you: from innocent jokes to

Every child is unique, and their party should reflect their interests, dreams, and hobbies. Today

Pest control is an important procedure for getting rid of insects in the home, but its successful completion

What makes SMOService unique is that it provides comprehensive promotion solutions backed by high quality standards and

An invitation to an anniversary celebration is not just a formality but an important part of preparing for the event.

The business center in a prestigious district of Saint Petersburg is a high-tech space. Renting in the «Т-4» business center will allow

There are immeasurably more popular perfume brands producing women's perfume and eau de parfum than can be listed

Buying a Minecraft account gives access to the full version of the game, with the ability to save progress and

Choosing flowers for a wedding is a task that requires not only good taste but also an understanding of

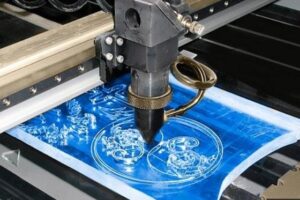

Laser engraving is a modern method of processing materials that makes it possible to apply images with high precision

Choosing flooring for a restaurant or café is not only a matter of aesthetics, but

In Voronezh, home flower delivery is becoming increasingly popular, and the company "Лавка

Printed clothing has become an integral part of the fashion world, offering unlimited opportunities for self-expression and

The first step is external cleaning. It can be done with a soft sponge or

Kamany is one of the oldest villages in Abkhazia, and it holds special significance

Furniture rental is becoming an increasingly popular solution for many people looking for temporary or flexible

Voice audio greetings are becoming an increasingly popular way to express one's feelings and wishes in

Chiang Mai is a city in northern Thailand, known as a cultural and historical center

These coins are known for their distinctive appearance: gold foil that recreates the look of real gold

Asia, with its vast expanses stretching from the icy tundra of Siberia to the tropical forests of Southeast

March 8 is International Women's Day, a wonderful occasion not only to delight colleagues and employees